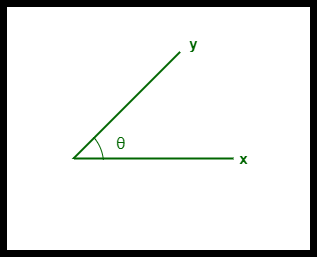

This is why we used TF and IDF to convert text into numbers: so that each document can be represented by a vector. Refer to the link for a detailed explanation of cosine similarity and the vector space model. I found the following explanation in the book Mahout in Action very useful. The cosine measure similarity is another similarity metric that depends on envisioning user preferences as points in space.
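To make the idea concrete, here is a minimal sketch of cosine similarity between two vectors: the dot product divided by the product of the two vector lengths. The function name `cosine_similarity` and the choice to return 0.0 for a zero vector are my own assumptions for illustration, not something taken from the book.

```python
import math

def cosine_similarity(vec_a, vec_b):
    """Cosine of the angle between two equal-length numeric vectors."""
    if len(vec_a) != len(vec_b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(a * b for a, b in zip(vec_a, vec_b))
    norm_a = math.sqrt(sum(a * a for a in vec_a))
    norm_b = math.sqrt(sum(b * b for b in vec_b))
    if norm_a == 0 or norm_b == 0:
        # Assumed convention: a zero vector is treated as similar to nothing.
        return 0.0
    return dot / (norm_a * norm_b)

# Vectors pointing the same way score close to 1; perpendicular ones score 0.
print(cosine_similarity([1.0, 1.0], [2.0, 2.0]))
print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))
```

Because only the angle matters, a long document and a short document that use the query terms in the same proportions get the same score.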

In Document1, for the term life, the normalized term frequency is 0.1 and its IDF is 1.405507153. Given below are the TF * IDF calculations for life and learning in all the documents.

Step 4: Vector Space Model – Cosine Similarity

From each document we derive a vector. If you need a refresher on vectors, refer here. The set of documents in a collection is then viewed as a set of vectors in a vector space. Using the formula given below, we can find the similarity between any two documents. In this example we are dealing with text documents.
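Deriving a vector from each document could look roughly like the sketch below, with one axis per query term. The helper names (`normalized_tf`, `to_vector`) are hypothetical. The IDF for learning is assumed to equal the quoted IDF for life, since both terms occur in 2 of the 3 documents, and weighting the query the same way as a document is also an assumption of this sketch.

```python
# IDF value quoted in the text for "life"; "learning" is assumed to share it
# because both terms occur in 2 of the 3 documents.
IDF = {"life": 1.405507153, "learning": 1.405507153}
QUERY_TERMS = ["life", "learning"]  # one axis per query term

def normalized_tf(term, text):
    """Occurrences of term divided by the total number of words in text."""
    words = text.lower().split()
    return words.count(term.lower()) / len(words)

def to_vector(text):
    """TF * IDF weight of each query term, in a fixed axis order."""
    return [normalized_tf(term, text) * IDF[term] for term in QUERY_TERMS]

document1 = "The game of life is a game of everlasting learning"
document2 = "The unexamined life is not worth living"
query = "life learning"  # assumption: the query is weighted like a document

doc1_vec = to_vector(document1)
doc2_vec = to_vector(document2)
query_vec = to_vector(query)
print(doc1_vec, doc2_vec, query_vec)
```

Document 2 never mentions learning, so its second component is zero, which is what pulls its direction away from the query vector.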

For example, in Document 1 the term game occurs two times. The total number of terms in the document is 10. Hence the normalized term frequency is 2 / 10 = 0.2. Given below are the normalized term frequencies for all the documents. Given below is the code in Python which will do the normalized TF calculation.

    def termFrequency(term, document):  # function name assumed; the original def line was lost
        # Lowercase and split on whitespace so that matching is case-insensitive
        normalizeDocument = document.lower().split()
        return normalizeDocument.count(term.lower()) / float(len(normalizeDocument))

The main purpose of doing a search is to find relevant documents matching the query. In the first step all terms are considered equally important. In fact, certain terms that occur too frequently have little power in determining relevance. We need a way to weigh down the effects of terms that occur too frequently. Also, the terms that occur less often in the documents can be more relevant. We need a way to weigh up the effects of less frequently occurring terms. Logarithms help us solve this problem.

Let us compute the IDF for the term game:

IDF(game) = 1 + log_e(Total number of documents / Number of documents with the term game in it)

There are 3 documents in all: Document1, Document2, Document3. Given below is the IDF for the terms occurring in all the documents. Since the terms the, life, is, and learning each occur in 2 out of 3 documents, they have a lower score than the other terms, which appear in only one document.

Given below is the Python code to calculate IDF.

    from math import log

    def inverseDocumentFrequency(term, allDocuments):
        numDocumentsWithThisTerm = 0
        for doc in allDocuments:  # assumed: allDocuments is a list of document strings
            if term.lower() in doc.lower().split():
                numDocumentsWithThisTerm = numDocumentsWithThisTerm + 1
        # Note: this divides by zero if no document contains the term
        return 1.0 + log(float(len(allDocuments)) / numDocumentsWithThisTerm)

Remember we are trying to find relevant documents for the query: life learning

For each term in the query, multiply its normalized term frequency by its IDF in each document.
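As a quick numeric check of the IDF formula and the multiplication step, here is a small sketch that works directly from document counts. The function name `idf_from_counts` and the 1.0 fallback for a term found in no document are assumptions made for this example.

```python
import math

def idf_from_counts(total_docs, docs_with_term):
    """IDF = 1 + ln(total_docs / docs_with_term)."""
    if docs_with_term == 0:
        return 1.0  # assumed fallback for a term that appears in no document
    return 1.0 + math.log(total_docs / docs_with_term)

# 3 documents in all: game occurs in 1 of them, life in 2 of them.
idf_game = idf_from_counts(3, 1)
idf_life = idf_from_counts(3, 2)
print(round(idf_game, 4), round(idf_life, 4))  # 2.0986 1.4055

# TF * IDF in Document 1: game has normalized TF 2/10, life has 1/10.
tf_idf_game_doc1 = 0.2 * idf_game
tf_idf_life_doc1 = 0.1 * idf_life
print(tf_idf_game_doc1, tf_idf_life_doc1)
```

The rarer term game ends up with the higher weight, both because it occurs more often in Document 1 and because fewer documents contain it.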

Let us go over each step in detail to see how it all works. Term Frequency, also known as TF, measures the number of times a term (word) occurs in a document. Given below are the terms and their frequency in each of the documents. In reality, each document will be of a different size. In a large document the frequency of the terms will be much higher than in a smaller one. Hence we need to normalize the term frequency based on the document's size. A simple trick is to divide the term frequency by the total number of terms.
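The normalization trick can be sketched for every term of a document at once. This version uses `collections.Counter` from the standard library; the function name `normalized_term_frequencies` is just a label chosen for this example, and words are split on whitespace only.

```python
from collections import Counter

def normalized_term_frequencies(text):
    """Map each term to its count divided by the total number of terms."""
    words = text.lower().split()
    counts = Counter(words)
    total = len(words)
    return {term: count / total for term, count in counts.items()}

document1 = "The game of life is a game of everlasting learning"
tf = normalized_term_frequencies(document1)
print(tf["game"], tf["life"])  # 0.2 0.1
```

Since every count is divided by the same total, the normalized frequencies of a document always add up to 1, regardless of how long the document is.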

In the year 1998 Google handled an average of 9800 search queries every day. In 2012 this number shot up to an average of 5.13 billion searches per day. The graph given below shows this astronomical growth. The major reason for Google's success is its PageRank algorithm. PageRank determines how trustworthy and reputable a given website is. There is also a second part: the input query entered by the user should be used to match the relevant documents and score them. In this post I will focus on that second part.

I have created 3 documents to show how this works.

Document 1: The game of life is a game of everlasting learning

Document 2: The unexamined life is not worth living

Let us imagine that you are doing a search on these documents with the following query: life learning. This is a free-text query, meaning a query in which the terms are typed freeform into the search interface, without any connecting search operators.
